I was looking through the Linked Open Data (LOD) cloud, and the datasets I came across are:

DBpedia - built from our one and only Wikipedia. You don't know its value until you come across a situation like the one I came across.

Freebase - Google is everywhere. Here too they have a presence, having acquired Freebase.

LinkedGeoData - Beautifully captures geographical data. AFAIK it gets its data from OpenStreetMap. Another reason to use OpenStreetMap :)

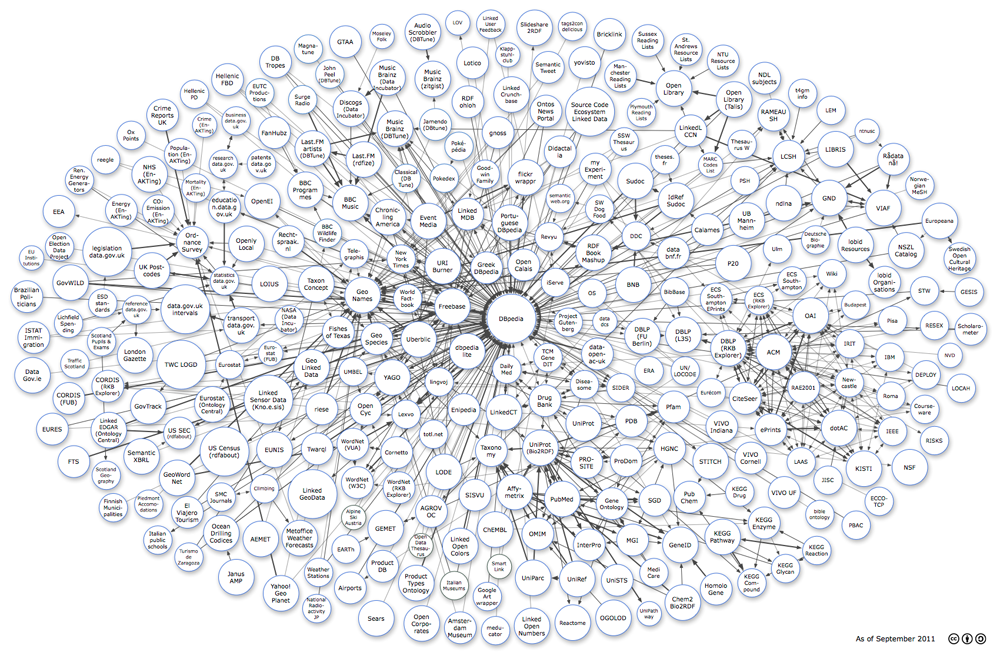

Now, to get a view of the intelligent web-3.0, just look here.

As Tim Berners-Lee sees it, this is the infant stage of one of humanity's biggest assets: the intelligent web that is going to replace the document web of web-2.0. And you can see that the biggest data endpoints are DBpedia and Freebase. The sad thing is that hardly any other organisations are contributing to it. As usual, the research and support are primarily driven by universities in Europe. A big cheer for them!!

So my quest was to pull data on a particular topic from this intelligent web. There is one more attraction to web-3.0: it's fully available for download to your desktop :). But there is a condition: when you make use of it, the info you create should also be free, aka Creative Commons.

Now, DBpedia provides SPARQL-based query endpoints. You can access them at http://dbpedia.org/snorql/

Here is a simple query that brings back all natural places in Wikipedia:

```sparql
PREFIX dbo: <http://dbpedia.org/ontology/>

# every resource typed as a natural place in the DBpedia ontology
SELECT DISTINCT ?place WHERE {
  ?place a dbo:NaturalPlace .
}
```

Voilà! You ask how it is possible to query the web? Hehe, that's the truth of web-3.0: it's not just about viewing dumb documents, it's all about firing SPARQL queries.
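If you want to fire the same kind of query from a program instead of the snorql page, here is a minimal Python sketch using only the standard library. It assumes DBpedia's public endpoint at https://dbpedia.org/sparql and the standard SPARQL 1.1 Protocol (the query sent as a `query` GET parameter, JSON results requested through the `Accept` header). The helper names are my own hypothetical ones, and the endpoint's availability and limits may change.

```python
import json
import urllib.parse
import urllib.request

# Assumption: DBpedia's public SPARQL endpoint (may change or be rate-limited).
ENDPOINT = "https://dbpedia.org/sparql"

QUERY = """PREFIX dbo: <http://dbpedia.org/ontology/>
SELECT DISTINCT ?place WHERE { ?place a dbo:NaturalPlace . } LIMIT 10"""


def build_request(endpoint: str, query: str) -> urllib.request.Request:
    """Build a SPARQL 1.1 Protocol GET request asking for JSON results."""
    url = endpoint + "?" + urllib.parse.urlencode({"query": query})
    return urllib.request.Request(
        url, headers={"Accept": "application/sparql-results+json"}
    )


def parse_bindings(body: str, var: str) -> list[str]:
    """Return the value of `var` from every result row that binds it."""
    data = json.loads(body)
    return [row[var]["value"] for row in data["results"]["bindings"] if var in row]


def run_query(endpoint: str, query: str, var: str) -> list[str]:
    """Send the query over HTTP (needs network access) and return one column."""
    with urllib.request.urlopen(build_request(endpoint, query), timeout=30) as resp:
        return parse_bindings(resp.read().decode("utf-8"), var)
```

Calling `run_query(ENDPOINT, QUERY, "place")` needs network access and returns the place URIs as strings. Keeping `parse_bindings` separate from the HTTP call means you can test the parsing on a saved response.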

Now, bad news for RDBMS lovers: after all, the relational schema was meant for enterprise databases, not for human knowledge :). Here you need description logic. If you have studied machine learning and AI, this field of mathematics, with its axioms and instances, should be familiar to you.
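To give a feel for the axioms-and-instances idea, here is a tiny Python sketch of just one description-logic ingredient: subsumption (subclass) reasoning. The classes and individuals are a hypothetical toy knowledge base, not taken from the DBpedia ontology, and a real DL reasoner handles far more (roles, restrictions, consistency checking).

```python
# Hypothetical toy knowledge base, not taken from the DBpedia ontology.
# TBox: subclass axioms (sub, super), read "every Volcano is a NaturalPlace".
TBOX = {
    ("Volcano", "NaturalPlace"),
    ("Mountain", "NaturalPlace"),
    ("NaturalPlace", "Place"),
}
# ABox: instance assertions (individual, class).
ABOX = {("Etna", "Volcano"), ("Everest", "Mountain")}

Axioms = set[tuple[str, str]]


def superclasses(cls: str, tbox: Axioms) -> set[str]:
    """Every class reachable from `cls` by following subclass axioms."""
    found: set[str] = set()
    stack = [cls]
    while stack:
        current = stack.pop()
        for sub, sup in tbox:
            if sub == current and sup not in found:
                found.add(sup)
                stack.append(sup)
    return found


def inferred_types(individual: str, abox: Axioms, tbox: Axioms) -> set[str]:
    """Asserted classes of an individual plus all classes the axioms entail."""
    asserted = {c for ind, c in abox if ind == individual}
    result = set(asserted)
    for c in asserted:
        result |= superclasses(c, tbox)
    return result


def instances_of(cls: str, abox: Axioms, tbox: Axioms) -> set[str]:
    """Individuals that belong to `cls`, directly or by inference."""
    return {ind for ind, _ in abox if cls in inferred_types(ind, abox, tbox)}
```

Etna was only asserted to be a volcano, yet it comes back as a natural place because the type is entailed by the axioms rather than stored in a table row.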

For Freebase it's different. They don't have SPARQL endpoints. As usual Google is evil :) even if they say "don't be evil". In Freebase's case the data is available in TSV format. Hard times: now I need to convert it into a semantic format, i.e. RDF, for example in N-Triples (NT) format.
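The TSV-to-RDF step can be sketched like this in Python. The column layout is an assumption (a headerless three-column file of subject id, property id and value), and the base namespace `http://example.org/freebase/` is a hypothetical placeholder, not an official Freebase URI scheme. Every value is written as a plain literal, so check the real dump's columns before relying on it.

```python
import csv
import io
from urllib.parse import quote

# Hypothetical base namespace; ids are minted into IRIs under it.
BASE = "http://example.org/freebase/"


def iri(local_id: str) -> str:
    """Turn an id like '/en/mount_everest' into an N-Triples IRI."""
    return "<" + BASE + quote(local_id.strip("/"), safe="/") + ">"


def literal(text: str) -> str:
    """Quote a string as an N-Triples literal, escaping special characters."""
    escaped = (
        text.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
        .replace("\r", "\\r")
    )
    return '"' + escaped + '"'


def tsv_to_ntriples(tsv_text: str) -> list[str]:
    """Convert (subject, property, value) rows into N-Triples lines.

    Assumption: three tab-separated columns, no header row.
    """
    lines = []
    reader = csv.reader(io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE)
    for row in reader:
        if len(row) != 3:
            continue  # skip blank or malformed rows instead of guessing
        subject, prop, value = row
        lines.append(f"{iri(subject)} {iri(prop)} {literal(value)} .")
    return lines
```

Writing one triple per line keeps the output streamable, so a large dump can be converted chunk by chunk and the results simply concatenated.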

It doesn't end there. My agony is growing: these datasets have very little info about India (one of the disadvantages of developing from a developing nation). So, human rights groups or NGOs planning a data collection: next time, try using web-3.0 based techniques. It's worth the effort, I guarantee. At the very least it will be useful for guys like me lurking for data in the LOD cloud. If you want to merge with the LOD cloud, they have some very simple norms:

- There must be resolvable http:// (or https://) URIs.

- They must resolve, with or without content negotiation, to RDF data in one of the popular RDF formats (RDFa, RDF/XML, Turtle, N-Triples).

- The dataset must contain at least 1000 triples. (Hence, your FOAF file most likely does not qualify.)

- The dataset must be connected via RDF links to a dataset that is already in the diagram. This means, either your dataset must use URIs from the other dataset, or vice versa. We arbitrarily require at least 50 links.

- Access of the entire dataset must be possible via RDF crawling, via an RDF dump, or via a SPARQL endpoint.
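Two of these norms are plain numbers you can check on your own N-Triples dump before submitting. Below is a rough Python sketch that counts triples and counts IRI-to-IRI links between your namespace and another dataset's, in either direction (for example `owl:sameAs` links to DBpedia). The namespaces are parameters you supply, the line-based regex is a simplification rather than a full N-Triples parser, and the resolvability and access norms are not checked at all.

```python
import re

# Rough N-Triples line shape: subject, predicate, object, closing dot.
# A simplification, not a validating N-Triples parser.
TRIPLE = re.compile(r"^(<[^>]*>|_:\S+)\s+(<[^>]*>)\s+(.+?)\s*\.\s*$")

MIN_TRIPLES = 1000  # "at least 1000 triples"
MIN_LINKS = 50      # "at least 50 links"


def lod_stats(nt_text: str, own_ns: str, other_ns: str) -> tuple[int, int]:
    """Count triples, and IRI-to-IRI links between our namespace and another's."""
    triples = links = 0
    for raw in nt_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments are not triples
        match = TRIPLE.match(line)
        if not match:
            continue
        triples += 1
        subj, _pred, obj = match.groups()
        if subj.startswith("<") and obj.startswith("<"):
            s, o = subj[1:-1], obj[1:-1]
            # a link goes either from us to them or from them to us
            if (s.startswith(own_ns) and o.startswith(other_ns)) or (
                s.startswith(other_ns) and o.startswith(own_ns)
            ):
                links += 1
    return triples, links


def meets_size_rules(nt_text: str, own_ns: str, other_ns: str) -> bool:
    """Check only the triple-count and link-count norms."""
    triples, links = lod_stats(nt_text, own_ns, other_ns)
    return triples >= MIN_TRIPLES and links >= MIN_LINKS
```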

So happy coding!!